Game Log 4 - Create

Game Description:

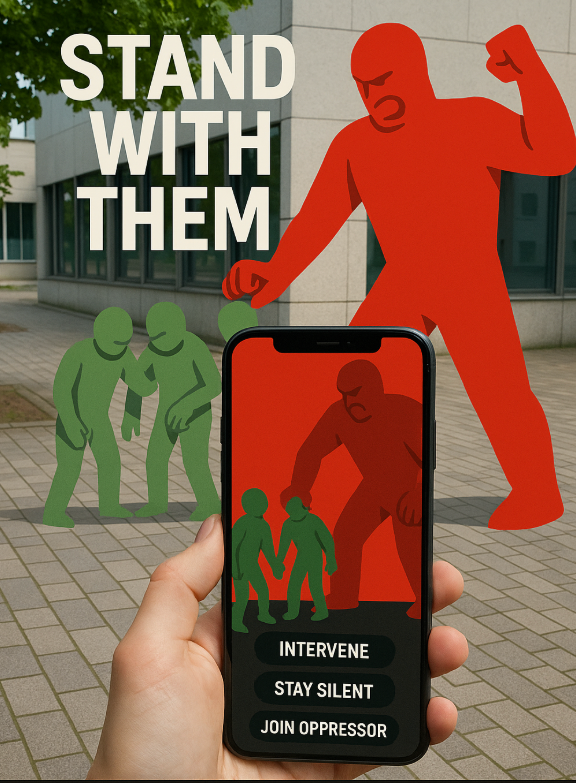

“Stand with Them” is an AR narrative experience that transforms your surroundings into a stage of power and resistance. Inspired by a striking painting of red giants oppressing green figures, the game overlays symbolic scenes of social control onto real‐world spaces and invites you to make moral choices: intervene, stay silent, or side with the oppressors. Each decision reshapes the virtual environment and characters, bringing Europe’s cultural memory of oppression and resistance to life while embodying core EU human rights values. By stepping into the role of an active citizen, you move from bystander to empowered agent, confronting injustice in both the game world and beyond.

Mechanism, European value, creativity:

Our game plays like a galgame: the story advances through dialogue, letting players immerse themselves in the details of each group's story. The demeanor of the oppressors and the oppressed in each group reflects the EU's human rights values.

The game is closely connected to the CGJ theme and the values of the European Union, emphasizing freedom, equality, and human rights.

a. CREATE

To support our project, we adopted several methods from CREATE and PLAY.

1. Playable Prototypes

We drew the entire game design on a paper board and discussed how playable the game is and whether it is easy to understand.

2. 6-8-5 Game Sketching

In this step, every group member took part, and together we produced 5-10 preliminary "culture + value + gameplay" game concepts. We then held a group vote to rank the results and decide on the final plan.

3. Concept Convergence

Building on the 6-8-5 Game Sketching, we designed the game prototype in detail, writing out the concept name, background story, core gameplay, and value proposition.

b. Process Documentation

Initial stage:

In the initial stage of the project, we adopted the simplest design: the three groups of characters had no actions or voice, and this version only featured simple modal dialog interactions. The teaching assistant suggested that we enhance the interactivity.

After expert review and test feedback:

In the early-stage feedback, the experts found the game's overall interactivity insufficient and advised us not to rely on the dialogue format alone. We also wanted the player to be able to interact directly with the character models. We therefore tried both poke and grab interactions, enriched the story details, and added voice to make the game feel more realistic.

Technical description:

We built the prototype in Unity 2022.3 LTS with OpenXR and Meta XR Building Blocks, relying entirely on the headset’s inside-out tracking and hand tracking. The experience launches in a minimalist StartScene, where a world-space StartUI Canvas offers the description of the game and a “Start” button. When the player taps “Start” (via controller poke), the app loads GameScene and enters placement mode: the controller’s ray lets the player choose a real-world spot on the floor, then three green/red NPC pairs appear around that point.

Once placed, GameScene drives all NPC interactions through a single core script, PersonInteraction. Approaching an NPC pair within a set distance automatically brings up a DialogueUI Canvas at their midpoint (always facing the player), and a SpeechBubble appears above the green NPC’s head (smooth-follow + voice). The player can then use hand poke to tap dialogue options or hand grab to “touch” a green NPC (triggering Help) or a red NPC (triggering Join). Walking away beyond the ignore threshold automatically invokes the Ignore option.
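The proximity behaviour described above can be sketched as a small state machine. This is an engine-agnostic Python illustration, not the project's PersonInteraction script: the distance values, the `PairState` names, and the function names are assumptions made for the example.

```python
import math
from enum import Enum

# Assumed values in metres; the log only mentions "a set distance" and
# "the ignore threshold", so these numbers are placeholders.
TRIGGER_DISTANCE = 1.5
IGNORE_DISTANCE = 3.0


class PairState(Enum):
    IDLE = "idle"        # player has not come close yet
    ENGAGED = "engaged"  # DialogueUI and SpeechBubble are shown
    IGNORED = "ignored"  # player walked away, so Ignore was invoked


def ground_distance(a, b):
    """Distance between two (x, y, z) points, ignoring height (y)."""
    return math.hypot(a[0] - b[0], a[2] - b[2])


def next_state(state, player_pos, pair_midpoint):
    """Advance one NPC pair's state from the player's current position."""
    d = ground_distance(player_pos, pair_midpoint)
    if state is PairState.IDLE and d <= TRIGGER_DISTANCE:
        return PairState.ENGAGED
    if state is PairState.ENGAGED and d > IGNORE_DISTANCE:
        return PairState.IGNORED
    return state
```

Keeping the ignore threshold larger than the trigger distance gives a band in which small steps back and forth do not flip the dialogue on and off.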

Behind the scenes, a ScriptableObject called InteractionData stores each scene’s text, button labels, audio clips, animation names, and score values. A singleton GameManager tracks completed interactions and cumulative score; when all three NPC pairs have been handled, it spawns a world-space EndUI Canvas showing the final score, a contextual remark, and Restart/Quit buttons that follow and face the user. This layered setup separates environment setup, input handling (controller ray, poke, grab, proximity), visual feedback (StartUI, DialogueUI, SpeechBubble, EndUI), and game logic (InteractionData, PersonInteraction, GameManager), which lets us prototype a fully untethered AR experience with intuitive, real-world interactions.
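The split between per-scene data and a score-keeping manager can be sketched roughly as follows. This is a Python stand-in for the Unity ScriptableObject and singleton, not the actual scripts; the field names, the `complete` method, and the score values in the usage note are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class InteractionData:
    """Per-scene content, standing in for the ScriptableObject asset."""
    text: str
    button_labels: list
    scores: dict  # choice name -> score delta (values are assumptions)


@dataclass
class GameManager:
    """Tracks which NPC pairs are done and the cumulative score."""
    total_pairs: int
    score: int = 0
    completed: set = field(default_factory=set)

    def complete(self, pair_id, choice, data):
        # Ignore repeat events for a pair that was already handled.
        if pair_id in self.completed:
            return
        self.completed.add(pair_id)
        self.score += data.scores[choice]

    @property
    def finished(self):
        # True once every pair has been handled; the real game would
        # spawn the EndUI at this point.
        return len(self.completed) == self.total_pairs
```

For example, with `scores={"Help": 2, "Ignore": 0, "Join": -1}`, calling `complete(0, "Help", data)` twice adds 2 to the score only once, because the second call is discarded.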

Challenge:

Challenge 1: Enabling "touch" interactions with virtual characters

Our initial attempt used hand pokes via collider triggers in OnTriggerEnter, but tuning collider size and position was error-prone, NPC body shapes varied too much, and physical versus scripted player movement conflicted—distance checks broke when the player moved.

Solution: We switched to Meta XR's Hand Grab Interactable, subscribing to grab events instead. By disabling all Position/Rotation constraints in GrabFreeTransformer, grabs no longer move the NPC but still fire events. We also disabled controller rays on the hands to avoid ray/poke conflicts, binding the grab event in script to our Help/Join methods.

Challenge 2: Stable, context-aware UI and speech bubbles

Initially, both the dialogue panels and bubbles used a per-frame UIFollower, causing them to jitter with headset micro-movements and frame drops.

Solution: We refactored so that only the EndUI uses UIFollower to constantly face the user. In GameScene, DialogueUI is instantiated once at each NPC pair's midpoint, while SpeechBubble employs a SmoothFollow script with Lerp for smooth head-anchored tracking—balancing steadiness with responsiveness.
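A Lerp-based smooth follow can be sketched in a few lines. This is a generic Python illustration rather than the project's SmoothFollow script; the `speed` parameter and the exponential blend factor are assumptions, chosen because they keep the motion independent of frame rate.

```python
import math


def lerp(a, b, t):
    """Linear interpolation between two (x, y, z) tuples."""
    return tuple(x + (y - x) * t for x, y in zip(a, b))


def smooth_follow(current, target, speed, dt):
    """Move `current` a fraction of the way toward `target`.

    The blend factor 1 - exp(-speed * dt) shrinks with the frame time,
    so short frames move the bubble less and long frames move it more.
    """
    t = 1.0 - math.exp(-speed * dt)
    return lerp(current, target, t)
```

A higher `speed` makes the bubble snap to the head faster; a lower value filters out more of the small movements at the cost of some lag.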

Challenge 3: Real-world placement of NPCs

Because NPCs originally spawned at fixed start positions, they could be occluded by real furniture or clip through walls.

Solution: We added a placement mode: upon loading GameScene, the player uses a controller ray to point at the floor. Pressing the trigger confirms the location, and the three NPC pairs are instantiated around that point using predefined offsets, ensuring they always appear in visible, unobstructed areas.
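Spawning the pairs around the confirmed point comes down to adding fixed offsets to the hit position. The sketch below is a Python illustration under assumed values: the offsets in `PAIR_OFFSETS` and the function name are hypothetical, since the log does not list the actual numbers.

```python
# Assumed (dx, dz) offsets in metres for the three NPC pairs,
# relative to the floor point the player confirmed.
PAIR_OFFSETS = [(-1.5, 1.0), (0.0, 2.0), (1.5, 1.0)]


def place_pairs(anchor, offsets=PAIR_OFFSETS):
    """Return one spawn position per NPC pair around `anchor`.

    `anchor` is the (x, y, z) floor point hit by the controller ray.
    Each pair keeps the anchor's height so it stands on the floor.
    """
    ax, ay, az = anchor
    return [(ax + dx, ay, az + dz) for dx, dz in offsets]
```

Calling `place_pairs((1.0, 0.0, 2.0))` yields three positions spread in an arc in front of the chosen spot.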

c. EXPO Preparation

Before the activity officially begins, we prepare so that participants can fully engage with the experience. To set the context, we display a poster that gives users a clear background introduction and explains the theme and relevance of the activity. The poster highlights the basic idea of the game and outlines the intended mode of expression, helping users understand what to expect and how to interact. We also present a concrete example of an interaction to demonstrate the flow, so that participants can grasp the mechanics and objectives before they begin.

What we hope to show at the EXPO:

How games can show the relationship between oppressors and the oppressed, how to show this oppressive relationship more clearly, and how to reflect the EU's human rights values.

Feedback

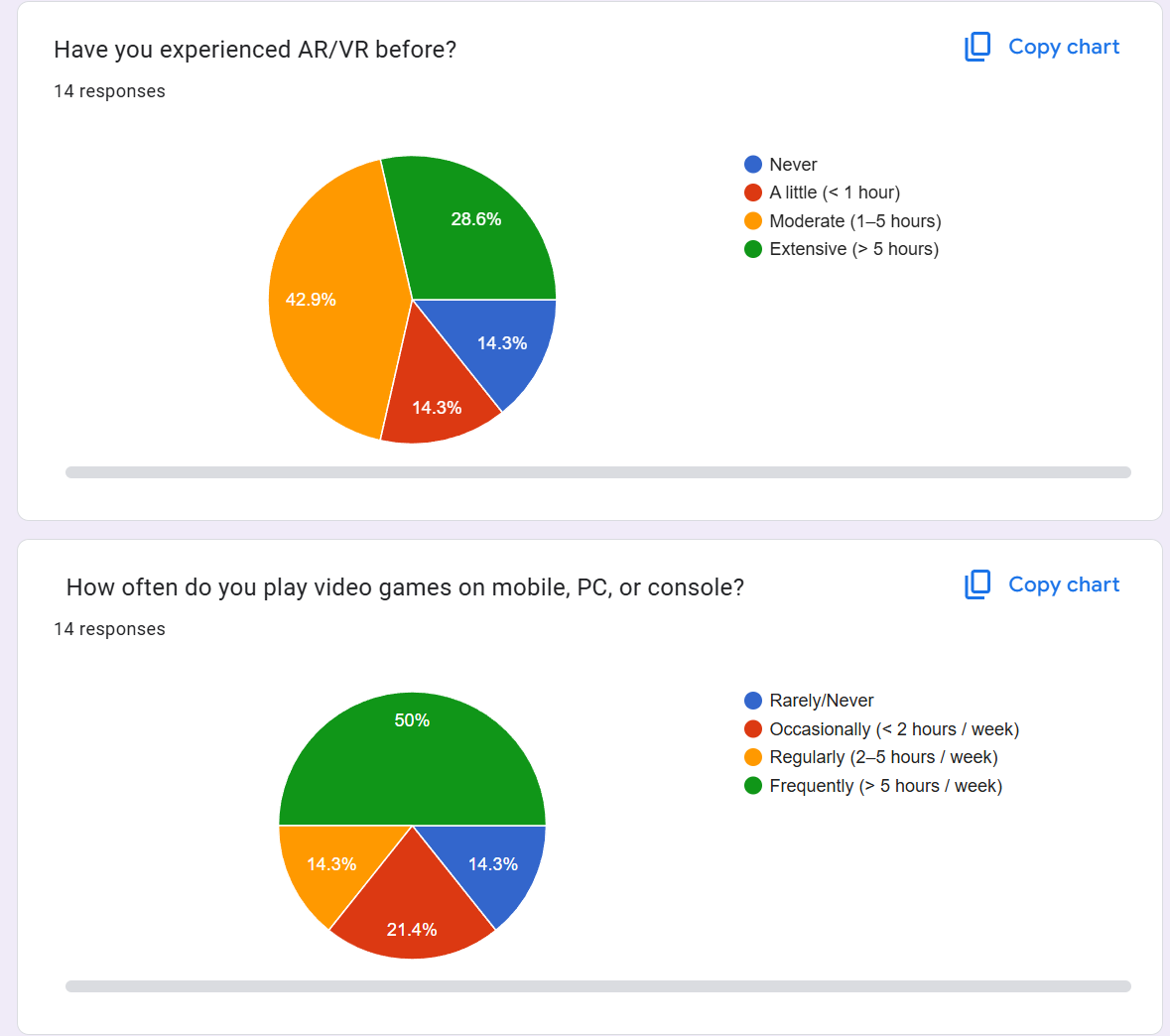

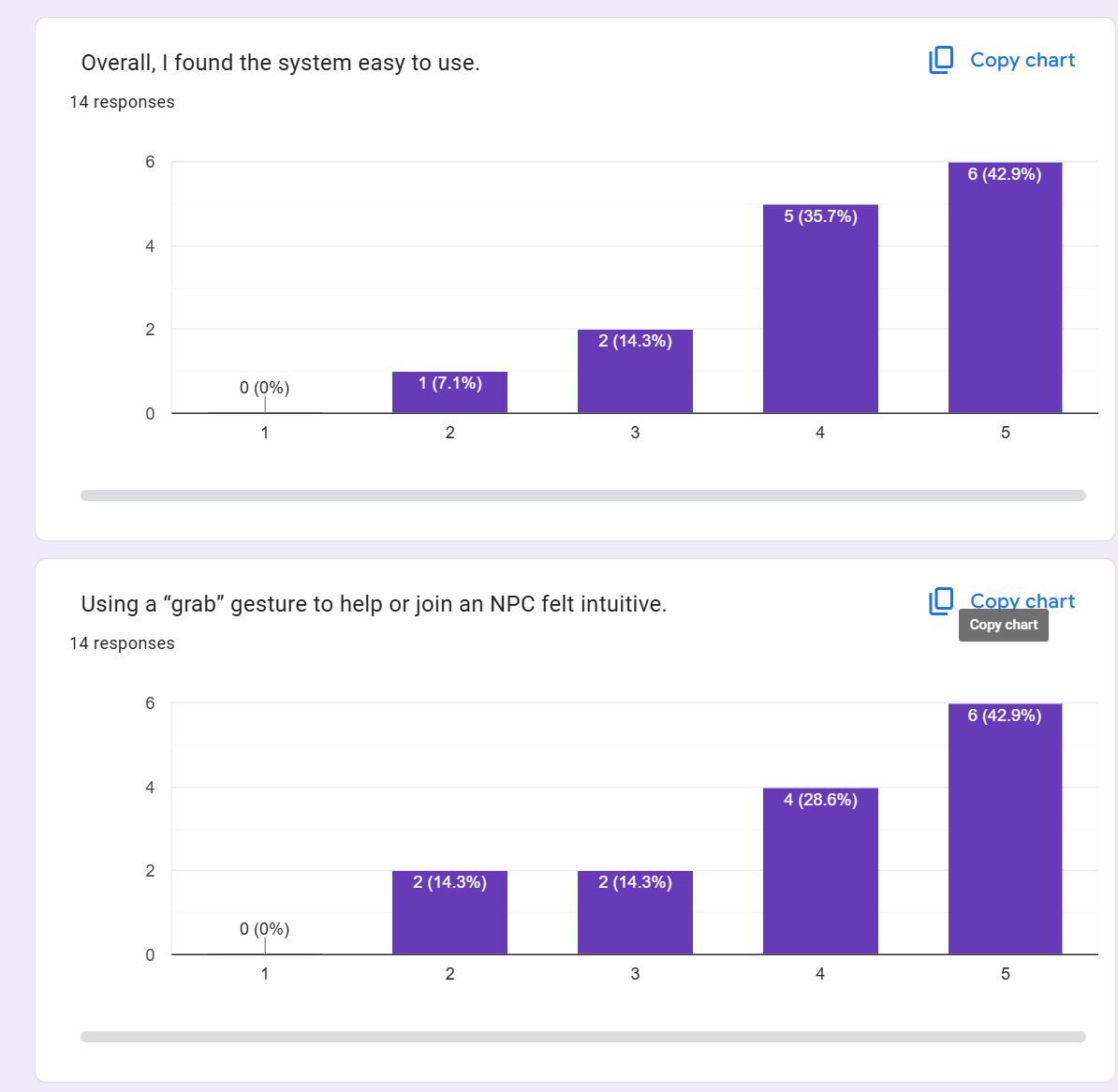

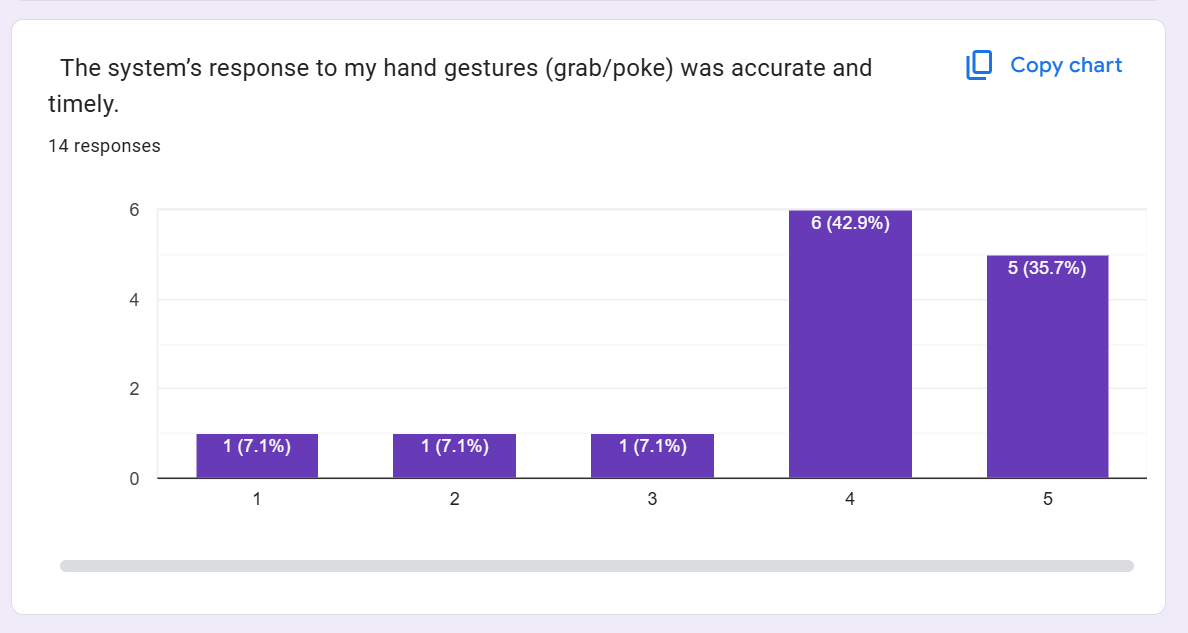

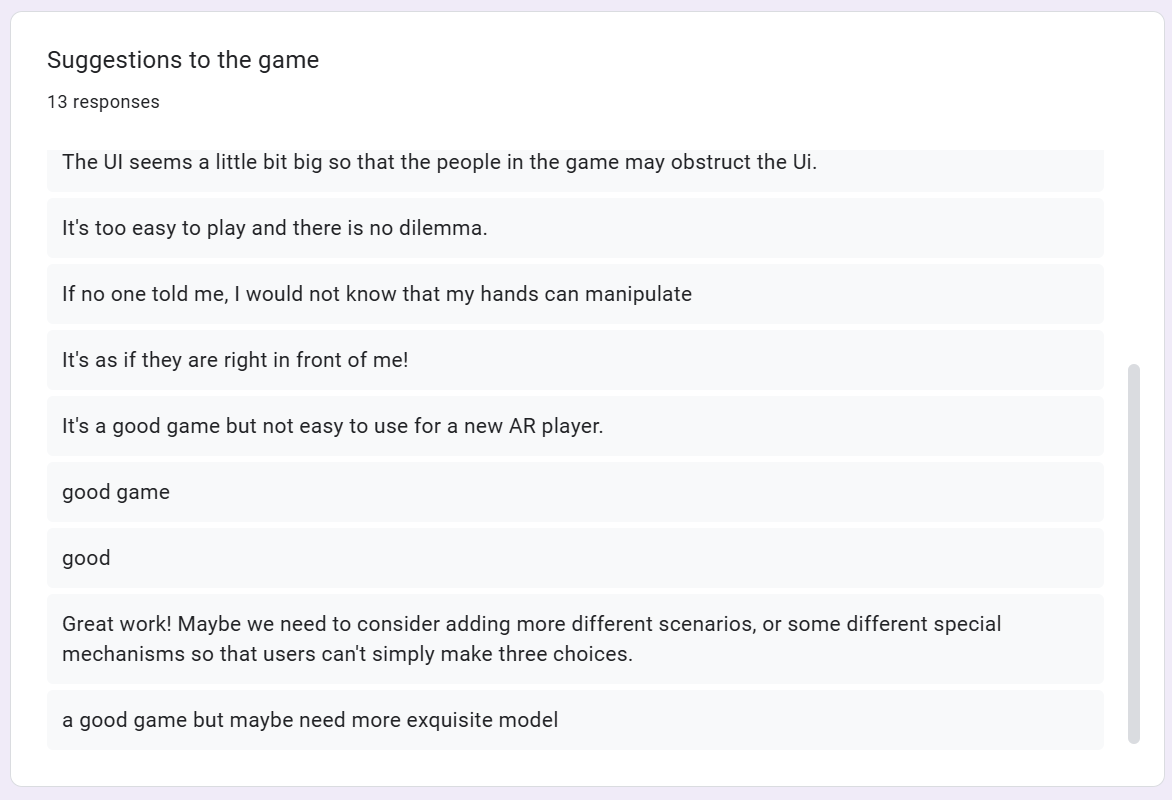

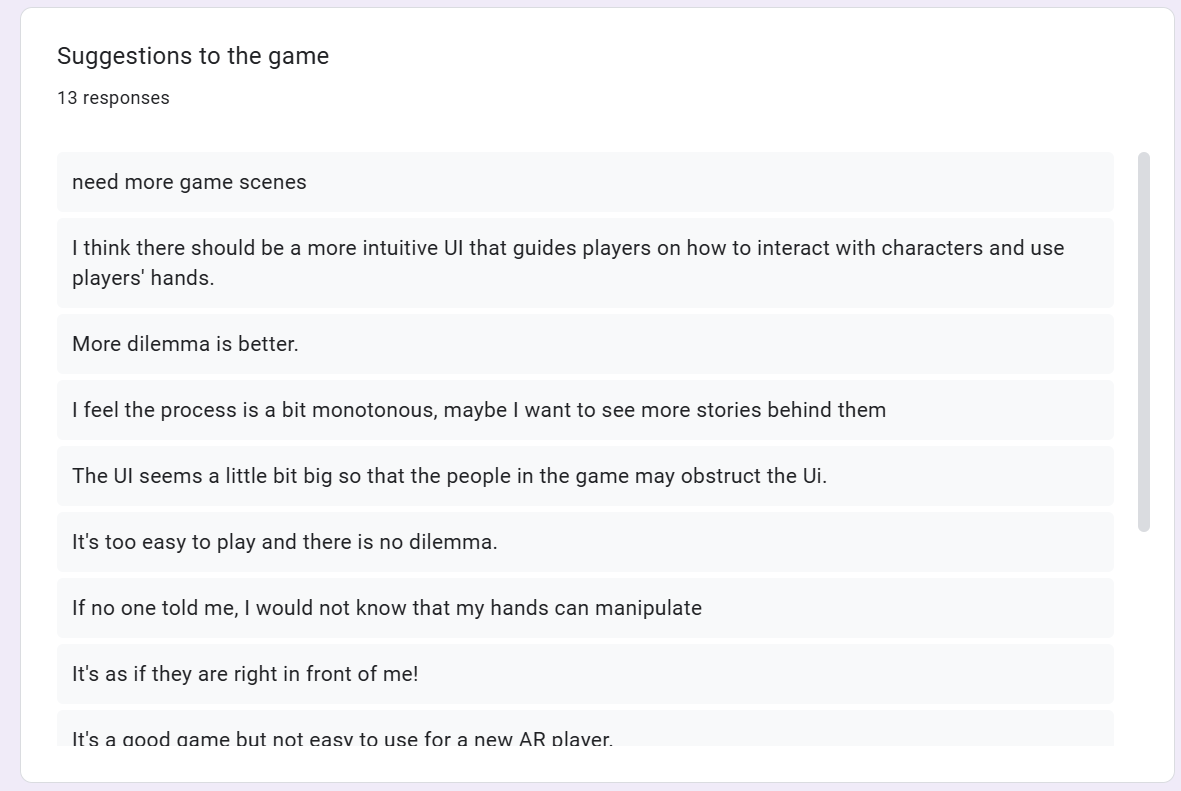

We collected feedback through a public form provided by Microsoft, which users were invited to fill out after playing the game. It includes two user background questions, five rating ("level") questions, and one open question.

In the two background questions, more than half of the users reported experience with VR/AR, and half reported experience playing games on mobile phones and computers. This is because the EXPO was mainly attended by students from the course, so basic familiarity with games could be assumed.

In the five rating questions, most users found the game immersive (85.7%) and fun (71.4%). In addition, 78.6% of users found the game easy to use, and more than half found grabbing intuitive and accurate.

These results suggest that the game design works well for this audience.

Future Work

Although most players' evaluations were positive, two core problems remain. First, the game lacks a real dilemma, so players can win too easily. Second, there is too little guidance at the beginning, so players do not know how to operate the game at first.

To address the dilemma problem, I have come up with three improvement plans for the game design:

1. Dual-Resource Balance (Compassion vs. Efficiency)

Introduce two interdependent metrics—Compassion and Efficiency—to force trade-offs. Helping actions consume “Energy”: a basic Help costs 2 Energy (+2 Compassion, –1 Efficiency), Ignore costs 0 Energy (+1 Efficiency), and Join costs 1 Energy (+1 Compassion, neutral Efficiency). Both bars are displayed on the HUD. At the end, different endings unlock based on thresholds (e.g. Compassion ≥8 & Efficiency ≥8 for the “Balanced Hero” ending, Compassion ≥8 & Efficiency <8 for the “Overextended Empath,” etc.). Players must strategically allocate their limited resources rather than default to Help every time.
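The bookkeeping for this plan can be sketched as below. The action costs and the two named endings come from the description above; the starting Energy, the function names, and the label returned for endings not listed here are assumptions made for the example.

```python
from dataclasses import dataclass

# Energy cost and stat changes per action, as listed in the plan.
ACTIONS = {
    "Help":   {"energy": 2, "compassion": 2, "efficiency": -1},
    "Ignore": {"energy": 0, "compassion": 0, "efficiency": 1},
    "Join":   {"energy": 1, "compassion": 1, "efficiency": 0},
}


@dataclass
class PlayerState:
    energy: int  # starting Energy is an assumption, not given in the log
    compassion: int = 0
    efficiency: int = 0


def apply_action(state, name):
    """Apply an action if the player can afford it; return success."""
    action = ACTIONS[name]
    if state.energy < action["energy"]:
        return False  # not enough Energy, so nothing changes
    state.energy -= action["energy"]
    state.compassion += action["compassion"]
    state.efficiency += action["efficiency"]
    return True


def ending(state, threshold=8):
    """Pick an ending from the two thresholds named in the plan."""
    if state.compassion >= threshold:
        if state.efficiency >= threshold:
            return "Balanced Hero"
        return "Overextended Empath"
    return "other ending (not specified in this log)"
```

Keeping `threshold` as a parameter makes it easy to tune the endings to however many scenes the final game contains.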

2. Partial Information & Hidden Consequences

Each scene’s DialogueUI hides critical context—e.g. “Why am I being oppressed?” is replaced with “…(information withheld)…”. After selecting Help, a randomized flag may trigger a “trap” outcome: the green NPC turns out to be the villain, deducting extra Compassion or spawning a chasing red NPC. This two-phase reveal (choice → outcome animation → consequence) forces players to weigh risk vs. reward instead of blindly helping.
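The randomized trap flag could be resolved along these lines. This is a minimal sketch: the trap probability, the reward and penalty sizes, and the function name are all assumptions, and the random generator is passed in so that outcomes can be reproduced while testing.

```python
import random


def resolve_help(rng, trap_chance=0.25, reward=2, penalty=2):
    """Decide the hidden outcome after the player has chosen Help.

    `rng` is a random.Random instance. A trap turns the expected
    Compassion reward into a deduction.
    """
    is_trap = rng.random() < trap_chance
    delta = -penalty if is_trap else reward
    return {"trap": is_trap, "compassion_delta": delta}
```

Calling `resolve_help(random.Random(seed))` with a fixed seed replays the same outcome, which helps when tuning how often traps should appear.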

3. Diverse Help Actions & Hostility Meter

Expand the Help option into three distinct actions with varying costs and effects, and track a new Hostility stat on red NPCs: Assist Task (–2 Energy, +2 Compassion, +0 Hostility), Lead Escape (–3 Energy, +1 Compassion, +1 Hostility), Confront (–4 Energy, +3 Compassion, +3 Hostility).

If a red NPC’s Hostility exceeds a threshold, they become aggressive and may chase or instantly fail the scenario. DialogueUI buttons display each action’s Energy/Compassion/Hostility deltas, creating meaningful dilemmas: should you spend extra resources to subdue hostility, or conserve energy and risk escalation?
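A rough sketch of the three Help actions and the Hostility check is shown below. The costs and stat changes are taken from the list above; the threshold value, the state dictionary, and the function names are assumptions for illustration.

```python
# (energy cost, compassion gain, hostility gain) per Help action.
HELP_ACTIONS = {
    "Assist Task": (2, 2, 0),
    "Lead Escape": (3, 1, 1),
    "Confront": (4, 3, 3),
}

HOSTILITY_THRESHOLD = 3  # assumed value; the log does not give one


def button_label(name):
    """Text for a DialogueUI button, showing the action's deltas."""
    cost, compassion, hostility = HELP_ACTIONS[name]
    return (f"{name} (-{cost} Energy, +{compassion} Compassion, "
            f"+{hostility} Hostility)")


def perform(state, name):
    """Apply a Help action to `state` and report what happened.

    `state` is a dict with "energy", "compassion" and "hostility".
    """
    cost, compassion, hostility = HELP_ACTIONS[name]
    if state["energy"] < cost:
        return "insufficient_energy"  # state is left unchanged
    state["energy"] -= cost
    state["compassion"] += compassion
    state["hostility"] += hostility
    if state["hostility"] > HOSTILITY_THRESHOLD:
        return "aggressive"  # the red NPC escalates
    return "ok"
```

With these numbers, a single Confront stays exactly at the threshold, and any further hostile action tips the red NPC into aggression.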

At present, I think the third scheme works best, so I am now adjusting the UI design and the game logic script to implement it.

As for guidance, I will add more guiding UI and text at the beginning of the game to tell players how to operate it.


AR-WXW-Group 9

| Field | Value |
| --- | --- |
| Status | In development |
| Author | GlitterStarWYT |
| Genre | Role Playing, Adventure, Simulation |
| Tags | 2D |
